Should You Skip Code Reviews?

“Code reviews are a waste of time, they slow us down and don’t provide any benefit” - this is how someone who wants to skip code reviews might argue. And there is some truth in the statement. Code reviews take a lot of time, about 5-6 hours per week and developer, and they introduce delays. Should we therefore skip them?

To answer this question, we need to take a look at why we perform code reviews. First and foremost, code reviews are a quality control mechanism to mitigate risk. By reviewing code, we reduce the likelihood of certain negative events occurring. The type of events will depend on your company, but common examples include:

- a bug affecting your production system causes financial loss

- a team member leaves the team and nobody knows how their code works

- there is no consistency in the code base and it becomes unmaintainable over time

The question boils down to a risk assessment. For example, is reducing the likelihood of financial loss due to a bug in production worth the time and money spent on code reviews? There is no universal answer to these types of questions. It depends on your environment. How big would the damage be and how likely is it to occur? Do you have other methods in place that can help to mitigate the same risks? The fact that code reviews have become an industry standard seems to indicate that conducting code reviews is a positive for most organizations though.

In this blog post, however, I don’t want to discuss whether you should perform code reviews in general. Instead, we take a look at several cases where teams might decide to skip code reviews and whether or not this is a good idea.

Skipping reviews for small changes

In some teams, code reviews are voluntary and are only performed for changes that the author deems to be dangerous or complex. At first glance, this seems to make sense. You are more likely to introduce bugs when changing thousand of lines instead of just a couple ones. While this is most likely true, it doesn’t really matter though. The maximum damage caused by a bug does not depend on the number of lines changed. Let’s look at an example to illustrate this.

In 2006, a Debian Maintainer wanted to suppress a noisy warning that was printed when running OpenSSL under Valgrind. He was able to track down the issue to two lines of the OpenSSL source code and fixed it by adding a tiny patch to Debian:

It took about 20 months till someone else figured out the full consequences of the change. To get rid of the warning, the maintainer had commented out the two lines, which according to Valgrind depended on uninitialized data anyways. By doing so, he broke the random number generator of OpenSSL, which could now only output 32767 different values. All SSH, OpenVPN, DNSSEC and other keys generated by the affected Debian OpenSSL versions were therefore easy to bruteforce and had to be replaced immediately. This often required manual steps, even to the point of reissuing paid SSL certificates.

Skipping code reviews based on the size of the code change is therefore not a good idea in my opinion. Especially since small changes are often reviewed quickly anyways.

Skipping reviews due to deadlines

To find out whether you should skip code reviews due to a tight schedule, you need to reevaluate the risk assessment from the introduction. Is the potential harm of missing the deadline higher than the potential damage of skipping code reviews? Is it unlikely that you will be able to meet the deadline if you do code reviews? If you can answer yes to both questions, you should skip the code reviews. You may still review the code afterwards.

This becomes dangerous when you are constantly under pressure to meet the next deadline. You are more likely to take shortcuts, introduce unmaintainable code that quickly fixes an issue, or ignore edge cases. If you also skip code reviews, no one will stop you from taking it too far and more bugs will slip through. If this is your standard way of working, you should first change your project or task planing, before thinking about code reviews though.

Skipping code reviews because there are tests

Can code reviews be skipped if the code is well tested? We can easily answer this question by revisiting the Debian OpenSSL example. By breaking the random number generator in a way that it was still using a tiny bit of entropy, no test failures were triggered. Tests are an important part of detecting unintended side effects, but they cannot prove the absence of bugs.

, provided under the [CC-BY-3.0](https://creativecommons.org/licenses/by/3.0/) license. Cartoon by Geek & Poke](/images/blog/skip-code-review/tdd_small.png)

In my opinion, tests should not replace code reviews, nor should code reviews replace tests. Since reviews are usually performed by someone other than the person writing the tests, combining the two approaches provides the greatest chance of detecting issues.

Code reviews only for new team members

Some teams only review code of new team members. Paying special attention to their code contributions is definitely a good idea. Code reviews can be used here to share knowledge and introduce them to the coding standards and norms used within the team. Do code reviews lose their usefulness the more senior a team member becomes though? An argument often used in this context is that experienced developers tend to introduce fewer bugs.

The recently discovered Dirty Pipe vulnerability in the Linux Kernel is a good counterexample. A developer with at least 24 years of experience in working with the Linux Kernel code made the simple mistake of not initializing a member variable of a structure. Combined with another code change from a developer with 22 years of experience, it was possible for any user to escalate their privileges to root.

It would be dangerous to assume that even the most experienced developer could write code without bugs or that their consequences are less severe. Apart from the fact that humans will always make mistakes, the other benefits (sharing of knowledge and responsibility, etc.) outweigh the delays of code review in my opinion. After all, more experienced developers tend to write code that passes code reviews quickly anyway.

Only certain code is reviewed

Should you only review mission critical code? What about internal scripts? Templates? Documentation?

At the end of the day, it comes back down to risk assessment. Is your code decoupled from any mission critical code? If not, a segmentation fault or memory leak could still take down the rest of the system. Is it a problem if nobody knows how the code works, or can it be rewritten in minutes anyway? A typo in a template or the documentation is most probably not going to cause any issues. But anyone who has worked with hardware or black box software components will know how much time you can waste with an incomplete or incorrect documentation.

I think you should use a pragmatic approach here, for example, use a review workflow, but don’t be too picky. Skim through the changes, so you can catch any obvious mistakes without introducing any long delays. If the schedule gets too tight, skip it.

How to avoid skipping reviews

There are valid reasons to skip code reviews, but often enough it is done for the wrong reasons. In general I would suggest to first check if you can improve your current workflow before skipping code reviews. Your goal should be to minimize the time it takes a developer to review the changes. Try to automate as much as possible. Use a code formatter to avoid coding style discussions. Use linters to detect trivial mistakes, so a human doesn’t need to point them out. Make sure your CI passes before someone tries to review the changes, etc.

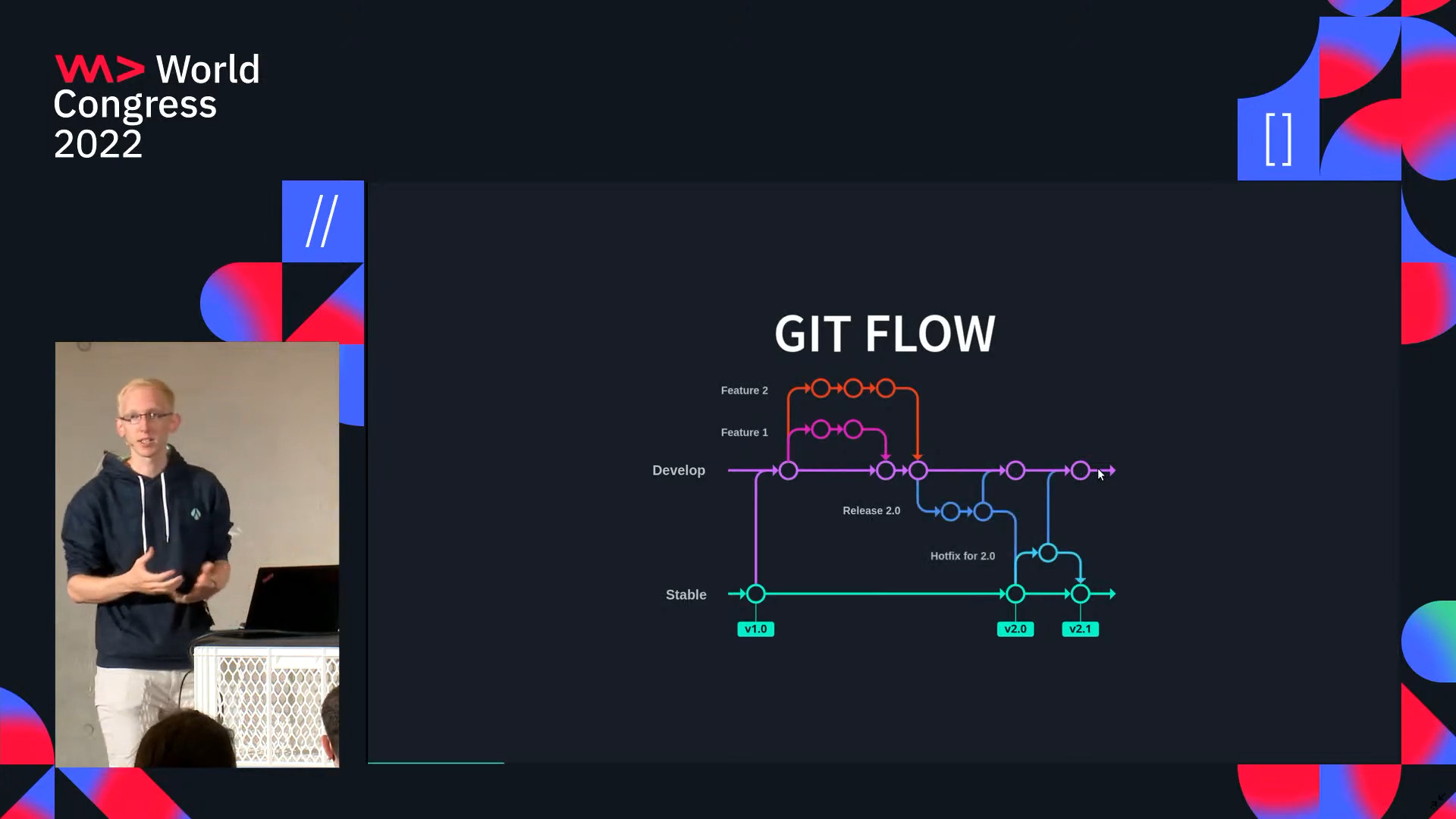

Also make sure that your team members know how to use Git efficiently. If you don’t know how to easily split your changes or how to modify and test multiple existing commits, code reviews become a whole lot more difficult. If you need a starting point, take a look at our talk at the WeAreDevelopers World Congress 22 on how to use Git for code reviews:

If you want to increase the efficiency of your review process even more, try out our code review tool MergeBoard. It does not only hide format/style changes, but also adds useful annotations to understand code changes faster. Similar changes are grouped together and you can see how code blocks have moved. Start your 14-day trial to discover all the other differences.